Thursday, 30 July 2015

ICSE 2015 and Software Engineering Research at Google

The large scale of our software engineering efforts at Google often pushes us to develop cutting-edge infrastructure. In May 2015, at the International Conference on Software Engineering (ICSE 2015), we shared some of our software engineering tools and practices and collaborated with the research community through a combination of publications, committee memberships, and workshops. Learn more about some of our research below.

Google was a Gold supporter of ICSE 2015.

Technical Research Papers:

A Flexible and Non-intrusive Approach for Computing Complex Structural Coverage Metrics

Michael W. Whalen, Suzette Person, Neha Rungta, Matt Staats, Daniela Grijincu

Automated Decomposition of Build Targets

Mohsen Vakilian, Raluca Sauciuc, David Morgenthaler, Vahab Mirrokni

Tricorder: Building a Program Analysis Ecosystem

Caitlin Sadowski, Jeffrey van Gogh, Ciera Jaspan, Emma Soederberg, Collin Winter

Software Engineering in Practice (SEIP) Papers:

Comparing Software Architecture Recovery Techniques Using Accurate Dependencies

Thibaud Lutellier, Devin Chollak, Joshua Garcia, Lin Tan, Derek Rayside, Nenad Medvidovic, Robert Kroeger

Technical Briefings:

Software Engineering for Privacy in-the-Large

Pauline Anthonysamy, Awais Rashid

Workshop Organizers:

2nd International Workshop on Requirements Engineering and Testing (RET 2015)

Elizabeth Bjarnason, Mirko Morandini, Markus Borg, Michael Unterkalmsteiner, Michael Felderer, Matthew Staats

Committee Members:

Caitlin Sadowski - Program Committee Member and Distinguished Reviewer Award Winner

James Andrews - Review Committee Member

Ray Buse - Software Engineering in Practice (SEIP) Committee Member and Demonstrations Committee Member

John Penix - Software Engineering in Practice (SEIP) Committee Member

Marija Mikic - Poster Co-chair

Daniel Popescu and Ivo Krka - Poster Committee Members

Wednesday, 29 July 2015

L'Oréal Canada finds beauty in programmatic buying

Cross-posted on the DoubleClick Advertiser Blog

While global sales of L'Oréal Luxe makeup brand Shu Uemura were booming, reaching its target audience across North America proved challenging. By collaborating with Karl Lagerfeld (and his cat, Choupette) and using DoubleClick Bid Manager and Google Analytics Premium, the brand ran a campaign that delivered nearly double the anticipated revenue.


To learn more about Shu Uemura’s approach, check out the full case study.

Posted by Kelly Cox, Product Marketing, DoubleClick

How Google Translate squeezes deep learning onto a phone

Posted by Otavio Good, Software Engineer, Google Translate

Today we announced that the Google Translate app now does real-time visual translation of 20 more languages. So the next time you’re in Prague and can’t read a menu, we’ve got your back. But how are we able to recognize these new languages?

In short: deep neural nets. When the Word Lens team joined Google, we were excited for the opportunity to work with some of the leading researchers in deep learning. Neural nets have gotten a lot of attention in the last few years because they’ve set all kinds of records in image recognition. Five years ago, if you gave a computer an image of a cat or a dog, it had trouble telling which was which. Thanks to convolutional neural networks, not only can computers tell the difference between cats and dogs, they can even recognize different breeds of dogs. Yes, they’re good for more than just trippy art—if you're translating a foreign menu or sign with the latest version of Google's Translate app, you're now using a deep neural net. And the amazing part is it can all work on your phone, without an Internet connection. Here’s how.

Step by step

First, when a camera image comes in, the Google Translate app has to find the letters in the picture. It needs to weed out background objects like trees or cars, and pick up on the words we want translated. It looks at blobs of pixels that have similar color to each other that are also near other similar blobs of pixels. Those are possibly letters, and if they’re near each other, that makes a continuous line we should read.
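The post does not say which algorithm finds these blobs. As a minimal sketch, one common way to group similar-colored neighboring pixels is a flood fill over 4-connected neighbors. The function name `find_blobs`, the per-channel `tolerance`, and the choice of 4-connectivity are all assumptions made for illustration, not details of the Translate app.

```python
from collections import deque


def find_blobs(image, tolerance=30):
    """Group 4-connected pixels of similar color into blobs.

    image: list of rows, each row a list of (r, g, b) tuples.
    Returns a list of blobs, each a list of (row, col) coordinates.
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []

    def similar(a, b):
        # Hypothetical similarity rule: every channel within `tolerance`.
        return all(abs(x - y) <= tolerance for x, y in zip(a, b))

    for r in range(height):
        for c in range(width):
            if seen[r][c]:
                continue
            seen[r][c] = True
            blob, queue = [], deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and not seen[ny][nx] and similar(image[y][x], image[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs
```

A later pass could keep only small blobs that sit near each other on a roughly horizontal line, which is the "continuous line we should read" described above.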

Second, Translate has to recognize what each letter actually is. This is where deep learning comes in. We use a convolutional neural network, training it on letters and non-letters so it can learn what different letters look like.

But interestingly, if we train just on very “clean”-looking letters, we risk not understanding what real-life letters look like. Letters out in the real world are marred by reflections, dirt, smudges, and all kinds of weirdness. So we built our letter generator to create all kinds of fake “dirt” to convincingly mimic the noisiness of the real world—fake reflections, fake smudges, fake weirdness all around.

Why not just train on real-life photos of letters? Well, it’s tough to find enough examples in all the languages we need, and it’s harder to maintain the fine control over what examples we use when we’re aiming to train a really efficient, compact neural network. So it’s more effective to simulate the dirt.
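To make the idea of simulated dirt concrete, here is a small sketch that adds a fake highlight band and random speckles to a clean grayscale glyph. The function `add_dirt`, its parameters, and the specific kinds of noise are hypothetical; the post does not describe the real letter generator at this level of detail.

```python
import random


def add_dirt(glyph, rng, speckle_prob=0.05, max_highlight=0.3):
    """Return a noisy copy of a grayscale glyph (rows of floats in [0, 1])."""
    width = len(glyph[0])
    # Fake reflection: brighten one randomly chosen band of columns.
    start = rng.randrange(width)
    band = range(start, min(width, start + max(1, width // 4)))
    boost = rng.uniform(0.0, max_highlight)
    noisy = []
    for row in glyph:
        new_row = []
        for x, value in enumerate(row):
            if x in band:
                value += boost
            # Fake dirt: occasionally replace a pixel with a random gray level.
            if rng.random() < speckle_prob:
                value = rng.random()
            # Clamp so the result stays a valid intensity.
            new_row.append(min(1.0, max(0.0, value)))
        noisy.append(new_row)
    return noisy


clean = [[0.0] * 8 for _ in range(8)]
dirty = add_dirt(clean, random.Random(0))
```

Passing in a seeded `random.Random` keeps each generated example reproducible, which helps when comparing training runs.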

The third step is to take those recognized letters and look them up in a dictionary to get translations. Since every previous step could have failed in some way, the dictionary lookup needs to be approximate. That way, if we read an ‘S’ as a ‘5’, we’ll still be able to match ‘5uper’ to the word ‘super’.
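One way to sketch an approximate lookup like this is an edit distance in which visually confusable characters are cheap to substitute. The confusable pairs, the 0.2 cost, and the `max_distance` threshold below are illustrative assumptions, not values from the Translate app.

```python
# Hypothetical pairs of characters that a recognizer might mix up.
CONFUSABLE = {frozenset("s5"), frozenset("o0"), frozenset("l1"), frozenset("b8")}


def substitution_cost(a, b):
    if a == b:
        return 0.0
    if frozenset((a, b)) in CONFUSABLE:
        return 0.2  # look-alike characters are a cheap mistake
    return 1.0


def distance(read, word):
    """Weighted edit distance between the recognized text and a dictionary word."""
    read, word = read.lower(), word.lower()
    previous = [float(j) for j in range(len(word) + 1)]
    for i, a in enumerate(read, start=1):
        current = [float(i)]
        for j, b in enumerate(word, start=1):
            current.append(min(
                previous[j] + 1.0,       # drop a character from `read`
                current[j - 1] + 1.0,    # insert a character from `word`
                previous[j - 1] + substitution_cost(a, b),
            ))
        previous = current
    return previous[-1]


def lookup(read, dictionary, max_distance=1.0):
    """Return the closest dictionary word, or None if nothing is close enough."""
    best = min(dictionary, key=lambda w: distance(read, w))
    return best if distance(read, best) <= max_distance else None


print(lookup("5uper", ["super", "supper", "paper"]))  # prints: super
```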

Finally, we render the translation on top of the original words in the same style as the original. We can do this because we’ve already found and read the letters in the image, so we know exactly where they are. We can look at the colors surrounding the letters and use that to erase the original letters. And then we can draw the translation on top using the original foreground color.
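The erase step can be sketched as follows, assuming the letters sit inside a known bounding box and the background is roughly uniform. Taking the per-channel median of the pixels just outside the box is one simple choice; the post only says that the surrounding colors are used, so the function `erase_letters` and the median rule are assumptions.

```python
from statistics import median


def erase_letters(image, box):
    """Fill `box` (top, left, bottom, right; inclusive) with the color around it.

    image: list of rows of (r, g, b) tuples, modified in place.
    Assumes the box does not cover the whole image.
    """
    top, left, bottom, right = box
    height, width = len(image), len(image[0])
    # Collect the one-pixel ring of pixels just outside the box.
    ring = [
        image[y][x]
        for y in range(max(0, top - 1), min(height, bottom + 2))
        for x in range(max(0, left - 1), min(width, right + 2))
        if not (top <= y <= bottom and left <= x <= right)
    ]
    background = tuple(int(median(channel)) for channel in zip(*ring))
    for y in range(top, bottom + 1):
        for x in range(left, right + 1):
            image[y][x] = background
    return background
```

Drawing the translated text in the original foreground color would then happen on top of the erased region.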

Crunching it down for mobile

Now, if we could do this visual translation in our data centers, it wouldn’t be too hard. But a lot of our users, especially those getting online for the very first time, have slow or intermittent network connections and smartphones starved for computing power. These low-end phones can be about 50 times slower than a good laptop—and a good laptop is already much slower than the data centers that typically run our image recognition systems. So how do we get visual translation on these phones, with no connection to the cloud, translating in real-time as the camera moves around?

We needed to develop a very small neural net, and put severe limits on how much we tried to teach it; in essence, we put an upper bound on the density of information it handles. The challenge here was in creating the most effective training data. Since we’re generating our own training data, we put a lot of effort into including just the right data and nothing more. For instance, we want to be able to recognize a letter with a small amount of rotation, but not too much. If we overdo the rotation, the neural network will use too much of its information density on unimportant things. So we put effort into making tools that would give us a fast iteration time and good visualizations. Within a few minutes, we can change the algorithms for generating training data, generate it, retrain, and visualize. From there we can look at which kinds of letters are failing and why. At one point, we were warping our training data too much, and ‘$’ started to be recognized as ‘S’. We were able to quickly identify that and adjust the warping parameters to fix the problem. It was like trying to paint a picture of letters that you’d see in real life with all their imperfections painted just perfectly.
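Spotting a failure like the ‘$’ and ‘S’ mix-up comes down to counting which wrong answers occur most often. A minimal sketch of such a check, with the hypothetical helper `top_confusions` working on (true label, predicted label) pairs:

```python
from collections import Counter


def top_confusions(pairs, n=3):
    """Return the n most frequent (true_label, predicted_label) mistakes."""
    errors = Counter((truth, guess) for truth, guess in pairs if truth != guess)
    return errors.most_common(n)


# Made-up evaluation results, for illustration only.
results = [("$", "S"), ("$", "S"), ("$", "$"), ("S", "S"), ("O", "0")]
print(top_confusions(results, n=1))  # prints: [(('$', 'S'), 2)]
```

Running a report like this after each retraining pass is one way to get the fast feedback loop the paragraph describes.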

To achieve real-time performance, we also heavily optimized and hand-tuned the math operations. That meant using the mobile processor’s SIMD instructions and tuning things like matrix multiplications so that the processing fits into all levels of cache memory.
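The cache-tuning idea can be illustrated with a tiled (blocked) matrix multiplication, which works on small sub-blocks so that the data being reused stays in cache. This Python sketch only shows the loop structure; it will not be fast, and the real implementation presumably uses native code with SIMD instructions. The function name and the `tile` size are assumptions.

```python
def matmul_tiled(a, b, tile=32):
    """Multiply matrices a (n x k) and b (k x m), one tile at a time."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    # Outer loops pick a block; inner loops do the work inside that block.
    for i0 in range(0, n, tile):
        for p0 in range(0, k, tile):
            for j0 in range(0, m, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_out, row_a = out[i], a[i]
                    for p in range(p0, min(p0 + tile, k)):
                        a_ip, row_b = row_a[p], b[p]
                        # Innermost loop walks contiguous rows, the part
                        # that SIMD instructions would vectorize.
                        for j in range(j0, min(j0 + tile, m)):
                            row_out[j] += a_ip * row_b[j]
    return out
```

In native code, the tile size would be chosen so that the blocks of `a`, `b`, and `out` being touched fit in a given cache level.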

In the end, we were able to get our networks to give us significantly better results while running about as fast as our old system—great for translating what you see around you on the fly. Sometimes new technology can seem very abstract, and it's not always obvious what the applications for things like convolutional neural nets could be. We think breaking down language barriers is one great use.


[Image: Some of the “dirty” letters we use for training. Dirt, highlights, and rotation, but not too much because we don’t want to confuse our neural net.]


Thursday, 16 July 2015

The Thorny Issue of CS Teacher Certification

Posted by Chris Stephenson, Head of Computer Science Education Programs

(Cross-posted on the Google for Education Blog)

There is a tremendous focus on computer science education in K-12. Educators, policy makers, the non-profit sector and industry are sharing a common message about the benefits of computer science knowledge and the opportunities it provides. In this wider effort to improve access to computer science education, one of the challenges we face is how to ensure that there is a pipeline of computer science teachers to meet the growing demand for this expertise in schools.

In 2013 the Computer Science Teachers Association (CSTA) released Bugs in the System: Computer Science Teacher Certification in the U.S. Based on 18 months of intensive Google-funded research, this report characterized the current state of teacher certification as being rife with “bugs in the system” that prevent it from functioning as intended. Examples of current challenges included states where someone with no knowledge of computer science can teach it, states where the requirements for teacher certification are impossible to meet, and states where certification administrators are confused about what computer science is. The report also demonstrated that this is actually a circular problem: states are hesitant to require certification when they have no programs to train the teachers, and teacher training programs are hesitant to create programs for which there is no clear certification pathway.

Addressing the issues with the current teacher preparation and certification system is a complex challenge and it requires the commitment of the entire computer science community. Fortunately, some of this work is already underway. CSTA’s report provides a set of recommendations aimed at addressing these issues. Educators, advocates, and policymakers are also beginning to examine their systems and how to reform them.

Google is also exploring how we might help. We convened a group of teacher preparation faculty, researchers, and administrators from across the country to brainstorm how we might work with teacher preparation programs to support the inclusion of computational thinking in those programs. As a result of this meeting, Dr. Aman Yadav, Professor of Educational Psychology and Educational Technology at Michigan State University, is now working on two research articles aimed at helping teacher preparation program leaders better understand what computational thinking is, and how it supports learning across multiple disciplines.

Google will also be launching a new online course called Computational Thinking for Educators. In this free course, educators working with students between the ages of 13 and 18 will learn how incorporating computational thinking can enhance and enrich learning in diverse academic disciplines and can help boost students’ confidence when dealing with ambiguous, complex or open-ended problems. The course will run from July 15 to September 30, 2015.

These kinds of community partnerships are one way that Google can contribute to practitioner-centered solutions. They also further the computer science education community’s efforts to help everyone understand that computer science is a deeply important academic discipline, one that deserves both a place in the K-12 canon and well-prepared teachers to share this knowledge with students.


Tuesday, 14 July 2015

Should My Kid Learn to Code?

Posted by Maggie Johnson, Director of Education and University Relations, Google


(Cross-posted on the Google for Education Blog)

Over the last few years, successful marketing campaigns such as Hour of Code and Made with Code have helped K12 students become increasingly aware of the power and relevance of computer programming across all fields. In addition, there has been growth in developer bootcamps, online “learn to code” programs (code.org, CS First, Khan Academy, Codecademy, Blockly Games, etc.), and non-profits focused specifically on girls and underrepresented minorities (URMs) (Technovation, Girls who Code, Black Girls Code, #YesWeCode, etc.).

This is good news, as we need many more computing professionals than are currently graduating from Computer Science (CS) and Information Technology (IT) programs. There is evidence that students are starting to respond positively too, given that undergraduate departments are experiencing capacity issues in accommodating all the students who want to study CS.

Most educators agree that basic application and internet skills (typing, word processing, spreadsheets, web literacy and safety, etc.) are fundamental, and thus, “digital literacy” is a part of K12 curriculum. But is coding now a fundamental literacy, like reading or writing, that all K12 students need to learn as well?

To gain a deeper understanding of the devices and applications they use every day, it’s important for all students to try coding. Doing so also has the positive effect of inspiring more potential future programmers. Furthermore, there is a set of relevant skills, often grouped under the name “computational thinking”, that is becoming more important for all students, given the growth in the use of computers, algorithms and data in many fields. These include:

- Abstraction is the replacement of a complex real-world situation with a simple model within which we can solve problems. CS is the science of abstraction: creating the right model for a problem, representing it in a computer, and then devising appropriate automated techniques to solve the problem within the model. A spreadsheet is an abstraction of an accountant’s worksheet; a word processor is an abstraction of a typewriter; a game like Civilization is an abstraction of history.

- An algorithm is a procedure for solving a problem in a finite number of steps that can involve repetition of operations, or branching to one set of operations or another based on a condition. Being able to represent a problem-solving process as an algorithm is becoming increasingly important in any field that uses computing as a primary tool (business, economics, statistics, medicine, engineering, etc.). Success in these fields requires algorithm design skills.

- As computers become essential in a particular field, more domain-specific data is collected, analyzed and used to make decisions. Students need to understand how to find the data; how to collect it appropriately and with respect to privacy considerations; how much data is needed for a particular problem; how to remove noise from data; what techniques are most appropriate for analysis; how to use an analysis to make a decision; etc. Such data skills are already required in many fields.

One way to represent these different skill sets and the students who need them is as follows:

All students need digital literacy, many need computational thinking depending on their career choice, and some will actually do the software development in high-tech companies, IT departments, or other specialized areas. I don’t believe all kids should learn to code seriously, but all kids should try it via programs like code.org, CS First or Khan Academy. This gives students a good introduction to computational thinking and coding, and provides them with a basis for making an informed decision on whether CS or IT is something they wish to pursue as a career.

Monday, 13 July 2015

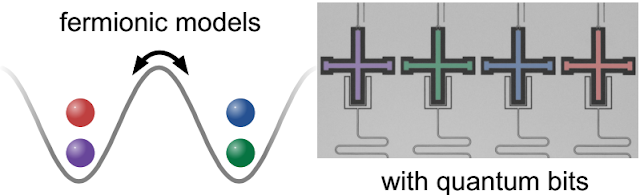

Simulating fermionic particles with superconducting quantum hardware

Posted by Rami Barends and Julian Kelly, Quantum Electronics Engineers, and John Martinis, Research Scientist

Digital quantum simulation is one of the key applications of a future, viable quantum computer. Researchers around the world hope that quantum computing will not only be able to process certain calculations faster than any classical computer, but also help simulate nature more accurately and answer longstanding questions with regard to high temperature superconductivity, complex quantum materials, and applications in quantum chemistry.

A crucial part of describing nature is simulating electrons. Without electrons, you cannot describe metals and their conductivity, or the interatomic bonds which hold molecules together. But simulating systems with many electrons makes for a very tough problem on classical computers, due to some of the electrons’ peculiar quantum properties.

Electrons are fermionic particles, and as such obey the well-known Pauli exclusion principle, which states that no two identical fermions in a system can occupy the same quantum state. This is due to a property called anticommutation, an inherent quantum mechanical behavior of all fermions, that makes it very tricky to fully simulate anything that is composed of complex interactions between electrons. The upshot of this anticommutative property is that if you have identical electrons, one at position A and another at position B, and you swap them, you end up with a different quantum state. If your simulation has many electrons, you need to carefully keep track of these changes, while ensuring that all the interactions between electrons are completely, yet separately, tunable.

Add to that the memory errors caused by fluctuations or noise from the environment, and the fact that quantum physics prevents one from directly monitoring the superconducting quantum bits (“qubits”) of a quantum computer to account for those errors, and you've got your hands full. However, earlier this year we reported on some exciting steps towards Quantum Error Correction. As it turns out, the hardware we built isn't only usable for error correction, but can also be used for quantum simulation.

In Digital quantum simulation of fermionic models with a superconducting circuit, published in Nature Communications, we present digital methods that enable the simulation of the complex interactions between fermionic particles, by using single-qubit and two-qubit quantum logic gates as building blocks. And with the recent advances in hardware and control we can now implement them.

We took our qubits and made them act like interacting fermions. We experimentally verified that the simulated particles anticommute, and implemented static and time-varying models. With over 300 logic gates, it is the largest digital quantum simulation to date, and the first implementation in a solid-state device.
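To see how qubit operators can be made to anticommute, here is a small numerical sketch for two fermionic modes. It uses the Jordan-Wigner mapping, a standard textbook construction that this post does not name, so treat it as an assumed illustration and not a description of the experiment. The helper functions are plain-Python stand-ins for a linear algebra library.

```python
def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def dagger(a):
    # All entries here are real, so the adjoint is just the transpose.
    return [list(col) for col in zip(*a)]


def anticommutator(a, b):
    return add(matmul(a, b), matmul(b, a))


I2 = [[1, 0], [0, 1]]
Z = [[1, 0], [0, -1]]
LOWER = [[0, 1], [0, 0]]  # single-qubit lowering operator: |1> -> |0>

# Jordan-Wigner: mode 1 carries a Z "string" on the qubit before it.
a0 = kron(LOWER, I2)
a1 = kron(Z, LOWER)

ZERO4 = [[0] * 4 for _ in range(4)]
IDENTITY4 = kron(I2, I2)

print(anticommutator(a0, a1) == ZERO4)              # prints: True
print(anticommutator(a0, dagger(a0)) == IDENTITY4)  # prints: True
```

The Z factor in `a1` is what supplies the minus sign when the two operators are swapped; without it, the two qubit operators would simply commute.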

This work was done in collaboration with Dr. Lucas Lamata, M.Sc. Laura García-Álvarez, and Prof. Enrique Solano from the QUTIS group at the University of the Basque Country (UPV/EHU) in Bilbao, Spain, who are experts in constructing algorithms and translating them into the streams of logic gates we can implement with our hardware.

For the future, digital quantum simulation holds the promise that it can be run on an error-corrected quantum computer. But before that, we foresee the construction of larger testbeds for simulation with improvements in logic gates and architecture. This experiment is a critical step on the path to creating a quantum simulator capable of modeling fermions as well as bosons (particles which can be interchanged, as opposed to fermions), opening up exciting possibilities for simulating physical and chemical processes in nature.

Digital quantum simulation is one of the key applications of a future, viable quantum computer. Researchers around the world hope that quantum computing will not only be able to process certain calculations faster than any classical computer, but also help simulate nature more accurately and answer longstanding questions with regard to high temperature superconductivity, complex quantum materials, and applications in quantum chemistry.

A crucial part in describing nature is simulating electrons. Without electrons, you cannot describe metals and their conductivity, or the interatomic bonds which hold molecules together. But simulating systems with many electrons makes for a very tough problem on classical computers, due to some of their peculiar quantum properties.

Electrons are fermionic particles, and as such obey the well-known Pauli exclusion principle which states that no fermions in a system can occupy the same quantum state. This is due to a property called anticommutation, an inherent quantum mechanical behavior of all fermions, that makes it very tricky to fully simulate anything that is composed of complex interactions between electrons. The upshot of this anticommutative property is that if you have identical electrons, one at position A and another at position B, and you swap them, you end up with a different quantum state. If your simulation has many electrons you need to carefully keep track of these changes, while ensuring all the interactions between electrons can be completely, yet separately tunable.

Add to that the memory errors caused by fluctuations or noise from the environment, and the fact that quantum physics prevents one from directly monitoring the superconducting quantum bits (“qubits”) of a quantum computer to account for those errors, and you've got your hands full. However, earlier this year we reported on some exciting steps towards Quantum Error Correction. As it turns out, the hardware we built isn't only usable for error correction, but can also be used for quantum simulation.

In “Digital quantum simulation of fermionic models with a superconducting circuit”, published in Nature Communications, we present digital methods that enable the simulation of the complex interactions between fermionic particles, by using single-qubit and two-qubit quantum logic gates as building blocks. And with the recent advances in hardware and control we can now implement them.
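One standard way to make qubits behave like fermions is the Jordan-Wigner transformation. The sketch below assumes that mapping purely for illustration; it is not the gate sequence from the paper, and the names `annihilation` and `anticommutator` are hypothetical helpers. It builds fermionic operators for two modes out of single-qubit matrices and checks that they anticommute.

```python
import numpy as np

I2 = np.eye(2)
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
# Single-qubit lowering operator |0><1|: maps "occupied" (|1>) to "empty" (|0>).
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])


def annihilation(mode, n_modes):
    """Jordan-Wigner annihilation operator for `mode` on `n_modes` qubits.

    A string of Z operators on the preceding qubits supplies the sign
    bookkeeping that plain qubit operators lack.
    """
    factors = [Z] * mode + [LOWER] + [I2] * (n_modes - mode - 1)
    op = np.array([[1.0]])
    for factor in factors:
        op = np.kron(op, factor)
    return op


def anticommutator(a, b):
    """Return {a, b} = ab + ba."""
    return a @ b + b @ a


a0 = annihilation(0, 2)
a1 = annihilation(1, 2)
a0_dag = a0.conj().T
a1_dag = a1.conj().T

# Canonical fermionic relations: {a_i, a_j} = 0 and {a_i, a_j^dagger} = delta_ij.
assert np.allclose(anticommutator(a0, a1), 0)
assert np.allclose(anticommutator(a0, a0_dag), np.eye(4))
assert np.allclose(anticommutator(a0, a1_dag), 0)

# Creating the two particles in the opposite order flips the sign of the state.
vacuum = np.zeros(4)
vacuum[0] = 1.0  # |00>: both modes empty
state_ab = a0_dag @ a1_dag @ vacuum
state_ba = a1_dag @ a0_dag @ vacuum
assert np.allclose(state_ab, -state_ba)

# Pauli exclusion: a mode cannot be filled twice.
assert np.allclose(a0_dag @ a0_dag, 0)
```

The Z string is what distinguishes these operators from independent qubit operators: without it, operators on different qubits would commute rather than anticommute.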

We took our qubits and made them act like interacting fermions. We experimentally verified that the simulated particles anticommute, and implemented static and time-varying models. With over 300 logic gates, it is the largest digital quantum simulation to date, and the first implementation in a solid-state device.

We worked with Dr. Lucas Lamata, M.Sc. Laura García-Álvarez, and Prof. Enrique Solano from the QUTIS group at the University of the Basque Country (UPV/EHU) in Bilbao, Spain, who are experts in constructing algorithms and translating them into the streams of logic gates we can implement with our hardware.

For the future, digital quantum simulation holds the promise that it can be run on an error-corrected quantum computer. But before that, we foresee the construction of larger testbeds for simulation with improvements in logic gates and architecture. This experiment is a critical step on the path to creating a quantum simulator capable of modeling fermions as well as bosons (particles which can be interchanged, as opposed to fermions), opening up exciting possibilities for simulating physical and chemical processes in nature.

Thursday, 9 July 2015

The Computer Science Pipeline and Diversity: Part 2 - Some positive signs, and looking towards the future

Posted by Maggie Johnson, Director of Education and University Relations, Google

(Cross-posted on the Google for Education Blog)

The disparity between the growing demand for computing professionals and the number of graduates in Computer Science (CS) and Information Technology (IT) has been highlighted in many recent publications. The tiny pipeline of diverse students (women and underrepresented minorities (URMs)) is even more troubling. Some of the factors causing these issues are:

- The historical lack of STEM (Science, Technology, Engineering and Mathematics) capabilities in our younger students; lack of proficiency has had a substantial impact on the overall number of students pursuing technical careers. (PCAST STEM Ed report, 2010)

- On the lack of girls in computing, boys often come into computing knowing more than girls because they have been doing it longer. This can cause girls to lose confidence with the perception that computing is a man’s world. Lack of role models, encouragement and relevant curriculum are additional factors that discourage girls’ participation. (Margolis 2003)

- On the lack of URMs in computing, the best and most enthusiastic minority students are effectively discouraged from pursuing technical careers because of systemic and structural issues in our high schools and communities, and because of unconscious bias of teachers and administrators. (Margolis, 2010)

- Math [1] and Science [2] results as measured by the National Assessment of Educational Progress (NAEP) have improved slightly since 2009, both in general and for female and minority students.

- Over the last 10 years, there has been an increase in the number of students earning STEM degrees, but the news on women graduates is not as positive.

“Overall, 40 percent of bachelor's degrees earned by men and 29 percent earned by women are now in STEM fields. At the doctoral level, more than half of the degrees earned by men (58 percent) and one-third earned by women (33 percent) are in STEM fields. At the bachelor's degree level, though, women are losing ground. Between 2004 and 2014, the share of STEM-related bachelor's degrees earned by women decreased in all seven discipline areas: engineering; computer science; earth, atmospheric and ocean sciences; physical sciences; mathematics; biological and agricultural sciences; and social sciences and psychology. The biggest decrease was in computer science, where women now earn 18 percent of bachelor's degrees (18 percent). In 2004, women earned nearly a quarter of computer science bachelor's degrees, at 23 percent.” - (U.S. News, 2015)

- There has been a steady growth in investment in education companies, particularly those focused on innovative uses of technology.

- The number of publications in Google Scholar on STEM education that focus on gender issues or minority students has steadily increased over the last several years.

[Figure: Results from Google Scholar, using “STEM education minority” and “STEM education gender” as search terms]

- Successful marketing campaigns such as Hour of Code and Made with Code have helped raise awareness on the accessibility and importance of coding, and the diverse career opportunities in CS.

- There has been growth in developer bootcamps over the last few years, as well as online “learn to code” programs (code.org, CS First, Khan Academy, Codecademy, Blockly Games, PencilCode, etc.), and an increase in opportunities for K-12 students to learn coding in their schools. We have also seen non-profits emerge focused specifically on girls and URMs (Technovation, Girls who Code, Black Girls Code, #YesWeCode, etc.)

- One of the most positive signals has been the growth of graduates in CS over the past few years.

[Figure. Source: 2013 Taulbee Survey, Computing Research Association]

So we are seeing small improvements in K-12 STEM proficiency and in undergraduate STEM and CS degrees earned, significant growth in investment in education innovation, more and more research on the issues of gender and ethnicity in STEM fields, and increased opportunities for all students to learn coding skills online, through non-profit programs, through developer boot camps, or in their schools.

However, an interesting and potentially threatening development resulting from this positive momentum is the lack of capacity and faculty in CS departments to handle the increased number of enrollments and majors in CS. Colleges and universities, as a whole, aren’t adequately prepared to handle the surge in CS education demand: currently, there just aren’t enough instructors to teach all the students who want to learn.

This has happened in the past. In the 1980s, with the introduction of the PC, and again during the dot-com boom, interest in CS surged. CS departments managed the load by increasing class sizes as much as they possibly could, and/or they put enrollment caps in place and made CS classes harder. The effect of the former was that some faculty left for industry, while the effect of the latter was a decrease in the diversity pipeline.

“These kinds of caps have two effects which limit access by women and under-represented minorities:

- First, the students who succeed the most in intro CS are the ones with prior experience.

- Second, creating these kinds of caps creates a perception of CS as a highly competitive field, which is a deterrent to many students. Those students may not even try to get into CS.”

If we allow the past to repeat itself, we may again find CS faculty leaving for industry and fewer diverse students going into the field. In addition, unlike the dot-com boom, where interest in CS plummeted with the bust, it’s unlikely we will see a decrease in enrollments, particularly in the introductory CS courses. “CS+X”, which represents the application of CS in other fields, is illustrated by the following sample list of interdisciplinary majors in various universities:

- Yale: "Computer Science and Psychology is an interdepartmental major..."

- USC: "B.S in Physics/Computer Science for students with dual interests..."

- Stanford: "Mathematical and Computational Sciences for students interested in..."

- Northeastern: "Computer Science/Music Technology dual major for students who want to explore connections between..."

- Lehigh: "BS in Computer Science and Business integrates..."

- Dartmouth: "The M.D.-Ph.D. Program in Computational Biology..."

At Google, we recently funded a number of universities via our 3X3 award program (3 times the number of students in 3 years), which aims to facilitate innovative, inclusive, and sustainable approaches to address these scaling issues in university CS programs. Our hope is to disseminate and scale the most successful approaches that our university partners develop. A positive development, which was not present when this happened in the past, is the recent innovation in online education and technology. The increase in bandwidth, high-quality content and interactive learning opportunities may help us get ahead of this challenging capacity issue.

[1] Average mathematics scores for fourth- and eighth-graders in 2013 were 1 point higher than in 2011, and 28 and 22 points higher respectively in comparison to the first assessment year in 1990. Hispanic students made gains in mathematics from 2011 to 2013 at both grades 4 and 8. Fourth- and eighth-grade female students scored higher in mathematics in 2013 than in 2011, but the scores for fourth- and eighth-grade male students did not change significantly over the same period. (Nation’s Report Card)

[2] The average eighth-grade science score increased two points, from 150 in 2009 to 152 in 2011. Scores also rose among public school students in 16 of 47 states that participated in both 2009 and 2011, and no state showed a decline in science scores from 2009 to 2011. A five-point gain from 2009 to 2011 by Hispanic students was larger than the one-point gain for White students, an improvement that narrowed the score gap between those two groups. Black students scored three points higher in 2011 than in 2009, narrowing the achievement gap with White students. (Nation’s Report Card)

Wednesday, 8 July 2015

The Computer Science Pipeline and Diversity: Part 1 - How did we get here?

Posted by Maggie Johnson, Director of Education and University Relations, Google

(Cross-posted on the Google for Education Blog)

For many years, the Computer Science industry has struggled with a pipeline problem. Since 2009, when the number of undergraduate computer science (CS) graduates hit a low mark, there have been many efforts to increase the supply to meet an ever-increasing demand. Despite these efforts, the projected demand over the next seven years is significant.

[Figure. Source: 2013 Taulbee Survey, Computing Research Association]

Even if we are able to sustain a positive growth in graduation rates over the next 7 years, we will only fill 30-40% of the available jobs.

“By 2022, the computer and mathematical occupations group is expected to yield more than 1.3 million job openings. However, unlike in most occupational groups, more job openings will stem from growth than from the need to replace workers who change occupations or leave the labor force.” -Bureau of Labor Statistics Occupational Projection Report, 2012.

More than 3 in 4 of these 1.3M jobs will require at least a Bachelor’s degree in CS or an Information Technology (IT) area. With our current production of only 16,000 CS undergraduates per year, we are way off the mark. Furthermore, within this too-small pipeline of CS graduates, there is an even smaller supply of diverse students, that is, women and underrepresented minorities (URMs). In 2013, only 14% of graduates were women and 20% were URMs. Why is this lack of representation important?

- The workforce that creates technology should be representative of the people who use it, or there will be an inherent bias in design and interfaces.

- If we get women and URMs involved, we will fill more than 30-40% of the projected jobs over the next 7 years.

- Getting more women and URMs to choose computing occupations will reduce social inequity, since computing occupations are among the fastest-growing and pay the most.

One fundamental reason is the lack of STEM (Science, Technology, Engineering and Mathematics) capabilities in our younger students. Over the last several years, international comparisons of K12 students’ performance in science and mathematics place the U.S. in the middle of the ranking or lower. On the National Assessment of Educational Progress, less than one-third of U.S. eighth graders show proficiency in science and mathematics. Lack of proficiency has led to lack of engagement in technical degree programs, which include CS and IT.

“In the United States, about 4% of all bachelor’s degrees awarded in 2008 were in engineering. This compares with about 19% throughout Asia and 31% in China specifically. In computer sciences, the number of bachelor’s and master’s degrees awarded decreased sharply from 2004 to 2007.” -NSF: Higher Education in Science and Engineering.

The lack of proficiency has had a substantial impact on the overall number of students pursuing technical careers, but there have also been shifts resulting from trends and events in the technology sector that compound the issue. For example, we saw an increase in CS graduates from 1997 to the early 2000’s which reflected the growth of the dot-com bubble. Students, seeing the financial opportunities, moved increasingly toward technical degree programs. This continued until the collapse, after which a steady decrease occurred, perhaps as a result of disillusionment or caution.

Importantly, there are additional factors that are minimizing the diversity of individuals, particularly women, pursuing these fields. It’s important to note that there are no biological or cognitive reasons that justify a gender disparity in individuals participating in computing (Hyde 2006). With similar training and experience, women perform just as well as men in computer-related activities (Margolis 2003). But there can be important differences in reinforced predilections and interests during childhood that affect the diversity of those choosing to pursue computer science.

In general, most young boys build and explore; play with blocks, trains, etc.; and engage in activity and movement. For a typical boy, a computer can be the ultimate toy that allows him to pursue his interests, and this can develop into an intense passion early on. Many girls like to build, play with blocks, etc. too. For the most part, however, girls tend to prefer social interaction. Most girls develop an interest in computing later through social media and YouTubers, girl-focused games, or through math, science and computing courses. They typically do not develop the intense interest in computing at an early age like some boys do – they may never experience that level of interest (Margolis 2003).

Thus, some boys come into computing knowing more than girls because they have been doing it longer. This can cause many girls to lose confidence and drive during adolescence with the perception that technology is a man’s world; both girls and boys perceive computing to be a largely masculine field (Mercier 2006). Furthermore, there are few role models at home, school or in the media changing the perception that computing is just not for girls. This overall lack of support and encouragement keeps many girls from considering computing as a career. (Google white paper 2014)

In addition, many teachers are oblivious to, or reinforce, gender stereotypes by assigning problems and projects that are oriented more toward boys, or are not of interest to girls. This lack of relevant curriculum is important. Many women who have pursued technology as a career cite relevant courses as critical to their decision (Liston 2008).

While gender differences exist with URM groups as well, there are compelling additional factors that affect them. Jane Margolis, a senior researcher at UCLA, did a study in 2000 resulting in the book Stuck in the Shallow End. She and her research group studied three very different high schools in Los Angeles, with different student demographics. The results of the study show that across all three schools, minority students do not get the same opportunities. While all of the students have access to basic technology courses (word processor, spreadsheet skills, etc.), advanced CS courses are typically only made available to students who, because of opportunities they already have outside school, need them less. Additionally, the best and most enthusiastic minority students can be effectively discouraged because of systemic and structural issues, and belief systems of teachers and administrators. The result is that a small, mostly homogeneous group of students has all the opportunities and is introduced to CS, while the rest are relegated to the “shallow end of computing skills”, which perpetuates inequities and keeps minority students from pursuing computing careers.

These are some of the reasons why the pipeline for technical talent is so small and why the diversity pipeline is even smaller. Over the last two years, however, we have started to see some positive signs:

- Many students are becoming more aware of the relevance and accessibility of coding through campaigns such as Hour of Code and Made with Code.

- This increase in awareness has helped to produce a steady increase in CS and IT graduates, and there’s every indication this growth will continue.

- More opportunities to participate in CS-related activities are becoming available for girls and URMs, such as CS First, Technovation, Girls who Code, Black Girls Code, #YesWeCode, etc.

Sunday, 5 July 2015

ICML 2015 and Machine Learning Research at Google

Posted by Corinna Cortes, Head, Google Research NY

This week, Lille, France hosts the 2015 International Conference on Machine Learning (ICML 2015), a premier annual Machine Learning event supported by the International Machine Learning Society (IMLS). As a leader in Machine Learning research, Google will have a strong presence at ICML 2015, with many Googlers publishing work and hosting workshops. If you’re attending, we hope you’ll visit the Google booth and talk with the Googlers to learn more about the hard work, creativity and fun that goes into solving interesting ML problems that impact millions of people. You can also learn more about our research being presented at ICML 2015 in the list below (Googlers highlighted in blue).

Google is a Platinum Sponsor of ICML 2015.

ICML Program Committee

Area Chair - Corinna Cortes & Samy Bengio

IMLS Board Member - Corinna Cortes

Papers:

Learning Program Embeddings to Propagate Feedback on Student Code

Chris Piech, Jonathan Huang, Andy Nguyen, Mike Phulsuksombati, Mehran Sahami, Leonidas Guibas

BilBOWA: Fast Bilingual Distributed Representations without Word Alignments

Stephan Gouws, Yoshua Bengio, Greg Corrado

An Empirical Exploration of Recurrent Network Architectures

Rafal Jozefowicz, Wojciech Zaremba, Ilya Sutskever

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

Sergey Ioffe, Christian Szegedy

DRAW: A Recurrent Neural Network For Image Generation

Karol Gregor, Ivo Danihelka, Alex Graves, Danilo Rezende, Daan Wierstra

Variational Inference with Normalizing Flows

Danilo Rezende, Shakir Mohamed

Structural Maxent Models

Corinna Cortes, Vitaly Kuznetsov, Mehryar Mohri, Umar Syed

Weight Uncertainty in Neural Networks

Charles Blundell, Julien Cornebise, Koray Kavukcuoglu, Daan Wierstra

MADE: Masked Autoencoder for Distribution Estimation

Mathieu Germain, Karol Gregor, Iain Murray, Hugo Larochelle

Fictitious Self-Play in Extensive-Form Games

Johannes Heinrich, Marc Lanctot, David Silver

Universal Value Function Approximators

Tom Schaul, Daniel Horgan, Karol Gregor, David Silver

Workshops:

Extreme Classification: Learning with a Very Large Number of Labels

Samy Bengio - Organizing Committee

Machine Learning for Education

Jonathan Huang - Organizing Committee

Workshop on Machine Learning Open Source Software 2015: Open Ecosystems

Ian Goodfellow - Program Committee

Machine Learning for Music Recommendation

Philippe Hamel - Invited Speaker

Large-Scale Kernel Learning: Challenges and New Opportunities

Poster - Just-In-Time Kernel Regression for Expectation Propagation

Wittawat Jitkrittum, Arthur Gretton, Nicolas Heess, S.M. Ali Eslami, Balaji Lakshminarayanan, Dino Sejdinovic, Zoltan Szabo

European Workshop on Reinforcement Learning (EWRL)

Rémi Munos - Organizing Committee

David Silver - Keynote

Workshop on Deep Learning

Geoff Hinton - Organizer

Tara Sainath, Oriol Vinyals, Ian Goodfellow, Karol Gregor - Invited Speakers

Poster - A Neural Conversational Model

Oriol Vinyals, Quoc Le

Oral Presentation - Massively Parallel Methods for Deep Reinforcement Learning

Arun Nair, Praveen Srinivasan, Sam Blackwell, Cagdas Alcicek, Rory Fearon, Alessandro De Maria, Vedavyas Panneershelvam, Mustafa Suleyman, Charles Beattie, Stig Petersen, Shane Legg, Volodymyr Mnih, Koray Kavukcuoglu, David Silver

This week, Lille, France hosts the 2015 International Conference on Machine Learning (ICML 2015), a premier annual Machine Learning event supported by the International Machine Learning Society (IMLS). As a leader in Machine Learning research, Google will have a strong presence at ICML 2015, with many Googlers publishing work and hosting workshops. If you’re attending, we hope you’ll visit the Google booth and talk with the Googlers to learn more about the hard work, creativity and fun that goes into solving interesting ML problems that impacts millions of people. You can also learn more about our research being presented at ICML 2015 in the list below (Googlers highlighted in blue).

Google is a Platinum Sponsor of ICML 2015.

ICML Program Committee

Area Chair - Corinna Cortes & Samy Bengio

IMLS Board Member - Corinna Cortes

Papers:

Learning Program Embeddings to Propagate Feedback on Student Code

Chris Piech, Jonathan Huang, Andy Nguyen, Mike Phulsuksombati, Mehran Sahami, Leonidas Guibas

BilBOWA: Fast Bilingual Distributed Representations without Word Alignments

Stephan Gouws, Yoshua Bengio, Greg Corrado

An Empirical Exploration of Recurrent Network Architectures

Rafal Jozefowicz, Wojciech Zaremba, Ilya Sutskever

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

Sergey Ioffe, Christian Szegedy

DRAW: A Recurrent Neural Network For Image Generation

Karol Gregor, Ivo Danihelka, Alex Graves, Danilo Rezende, Daan Wierstra

Variational Inference with Normalizing Flows

Danilo Rezende, Shakir Mohamed

Structural Maxent Models

Corinna Cortes, Vitaly Kuznetsov, Mehryar Mohri, Umar Syed

Weight Uncertainty in Neural Networks

Charles Blundell, Julien Cornebise, Koray Kavukcuoglu, Daan Wierstra

MADE: Masked Autoencoder for Distribution Estimation

Mathieu Germain, Karol Gregor, Iain Murray, Hugo Larochelle

Fictitious Self-Play in Extensive-Form Games

Johannes Heinrich, Marc Lanctot, David Silver

Universal Value Function Approximators

Tom Schaul, Daniel Horgan, Karol Gregor, David Silver

Workshops:

Extreme Classification: Learning with a Very Large Number of Labels

Samy Bengio - Organizing Committee

Machine Learning for Education

Jonathan Huang - Organizing Committee

Workshop on Machine Learning Open Source Software 2015: Open Ecosystems

Ian Goodfellow - Program Committee

Machine Learning for Music Recommendation

Philippe Hamel - Invited Speaker

Large-Scale Kernel Learning: Challenges and New Opportunities

Poster - Just-In-Time Kernel Regression for Expectation Propagation

Wittawat Jitkrittum, Arthur Gretton, Nicolas Heess, S.M. Ali Eslami, Balaji Lakshminarayanan, Dino Sejdinovic, Zoltan Szabo

European Workshop on Reinforcement Learning (EWRL)

Rémi Munos - Organizing Committee

David Silver - Keynote

Workshop on Deep Learning

Geoff Hinton - Organizer

Tara Sainath, Oriol Vinyals, Ian Goodfellow, Karol Gregor - Invited Speakers

Poster - A Neural Conversational Model

Oriol Vinyals, Quoc Le

Oral Presentation - Massively Parallel Methods for Deep Reinforcement Learning

Arun Nair, Praveen Srinivasan, Sam Blackwell, Cagdas Alcicek, Rory Fearon, Alessandro De Maria, Vedavyas Panneershelvam, Mustafa Suleyman, Charles Beattie, Stig Petersen, Shane Legg, Volodymyr Mnih, Koray Kavukcuoglu, David Silver

Wednesday, 1 July 2015

DeepDream - a code example for visualizing Neural Networks

Posted by Alexander Mordvintsev, Software Engineer; Christopher Olah, Software Engineering Intern; and Mike Tyka, Software Engineer

Two weeks ago we blogged about a visualization tool designed to help us understand how neural networks work and what each layer has learned. In addition to gaining some insight on how these networks carry out classification tasks, we found that this process also generated some beautiful art.

We have seen a lot of interest and received some great questions, from programmers and artists alike, about the details of how these visualizations are made. We have decided to open source the code we used to generate these images in an IPython notebook, so now you can make neural network inspired images yourself!

Top: Input image. Bottom: output image made using a network trained on places by MIT Computer Science and AI Laboratory.

The code is based on Caffe and uses available open source packages, and is designed to have as few dependencies as possible. To get started, you will need the following (full details in the notebook):

- NumPy, SciPy, PIL, IPython, or a scientific python distribution such as Anaconda or Canopy.

- Caffe deep learning framework (Installation instructions)

Once you’re set up, you can supply an image and choose which layers in the network to enhance, how many iterations to apply and how far to zoom in. Alternatively, different pre-trained networks can be plugged in.
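To give a feel for what "enhancing a layer" over a number of iterations means, here is a minimal sketch of the underlying idea: gradient ascent on the input to increase a layer's activations. This is not the notebook's code and does not use Caffe. The single linear-plus-ReLU "layer", the weight matrix `W`, and the function names `layer_forward`, `objective`, `dream_step` and `dream` are all hypothetical stand-ins chosen so the example runs with NumPy alone, and the zoom step is left out.

```python
import numpy as np


def layer_forward(x, W):
    """Toy stand-in for a network layer: a linear map followed by ReLU."""
    return np.maximum(W @ x, 0.0)


def objective(x, W):
    """How strongly the layer responds: half the squared L2 norm of its activations."""
    a = layer_forward(x, W)
    return 0.5 * float(np.sum(a ** 2))


def dream_step(x, W, step_size=0.1):
    """One gradient-ascent step on the input.

    For this toy layer the gradient of the objective with respect to x is
    W^T relu(W x): inactive units contribute nothing because their
    activation is already zero.
    """
    grad = W.T @ layer_forward(x, W)
    scale = np.abs(grad).mean()
    if scale == 0.0:
        # No unit is active, so there is nothing to amplify.
        return x
    # Normalizing by the mean gradient magnitude keeps the step size
    # comparable across inputs and layers (a choice made for this sketch).
    return x + step_size * grad / scale


def dream(x, W, n_iter=10, step_size=0.1):
    """Apply several ascent steps; n_iter plays the role of the iteration count."""
    for _ in range(n_iter):
        x = dream_step(x, W, step_size)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.standard_normal((8, 16))   # hypothetical layer weights
    x0 = rng.standard_normal(16)       # hypothetical flattened "image"
    x1 = dream(x0, W, n_iter=10)
    print(objective(x0, W), objective(x1, W))
```

In a real network the gradient would come from backpropagation through all layers up to the chosen one, and picking a different layer changes which features get amplified.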

It'll be interesting to see what imagery people are able to generate. If you post images to Google+, Facebook, or Twitter, be sure to tag them with #deepdream so other researchers can check them out too.
